Getting Physical: Extreme abuse of Intel based Paging Systems - Part 1

Hi! After Enrique Elias Nissim (@kiqueNissim) and I presented "Getting Physical: Extreme abuse of Intel based Paging Systems" at CanSecWest 2016, I decided to write a series of blog posts explaining in detail what we presented and showing what we couldn't fit into the talk (50 minutes is a lot of time, but not in this case!). The idea of these blog posts is to explain how the Windows and Linux paging systems are implemented and how they can be abused by kernel exploits.

Current Windows Kernel Protections

Since Windows Vista, Microsoft has implemented a series of Windows kernel protections with the idea of mitigating, directly or indirectly, some types of kernel exploitations. Among the most well known protections, we can name DEP/NX, KASLR, SMEP and Integrity Levels.

DEP/NX: With the appearance of the NX (No-eXecute) bit in Intel processors and its use since Windows XP SP2, a new feature called "Data Execution Prevention" appeared. This feature prevents code execution in DATA areas like the STACK, the HEAP, etc. Although at first it was only used to protect USER SPACE programs, it was later used to protect some KERNEL SPACE areas by preventing DATA execution in the Windows kernel. It's worth noting that Intel has supported CODE and DATA isolation since the 80286 CPU, through PROTECTED MODE MEMORY SEGMENTATION, although most operating systems did not take advantage of it. The memory model chosen by most was the FLAT MODEL, where code and data overlap, which greatly simplifies memory management. As a result of this poor design, the ridiculous idea of data execution was introduced. Unfortunately, this concept is not very intuitive for developers far removed from low-level and secure programming.

KASLR: Since Windows Vista, kernel and driver base addresses have been randomized. It's probably the most effective mitigation. Its greatest effectiveness can be seen against remote kernel exploits, where it's not possible to predict, in a deterministic way, where the kernel and drivers are loaded. The only way to attack successfully is through an information leak, which usually means exploiting a second vulnerability. On the other hand, in local scenarios (Privilege Escalation exploits), this protection is not very effective because it's possible to get kernel addresses by calling the "NtQuerySystemInformation" function.

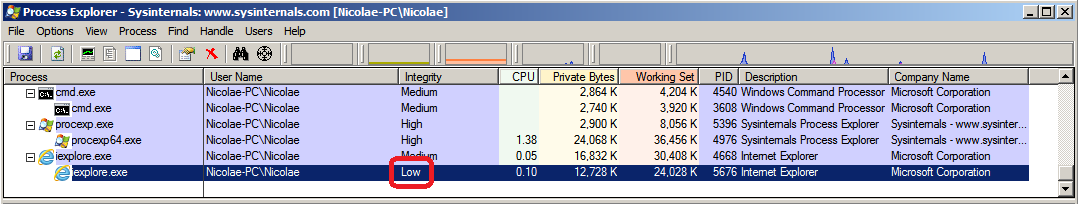

Integrity Levels: They have been implemented since Windows Vista. Talking specifically about kernel exploit mitigation, this protection has become more effective since Windows 8.1. Most applications run at Medium Integrity Level, which means that if they are exploited, it's "relatively" easy to pivot from them and escalate privileges through a kernel vulnerability to get system privileges. On the other hand, there are some applications like "Internet Explorer" or "Google Chrome" that run in a more restrictive sandbox, where the integrity level is set to "Low".

In this case, if any of them are exploited and the attacker wants to escalate privileges through a kernel exploit, this protection becomes effective, because it's not possible to get kernel addresses by calling the "NtQuerySystemInformation" function. This is because some call restrictions were implemented.

SMEP: It means "Supervisor Mode Execution Prevention". It has been implemented by Intel CPUs since the "Ivy Bridge" processor line and it has been supported since Windows 8. The idea of this feature is to separate executable KERNEL SPACE from executable USER SPACE. So, if this protection is enabled, only code located in kernel space can be executed in KERNEL MODE. This means that, if the EIP/RIP register is redirected to user space through a kernel vulnerability, the CPU will raise an exception. In that way, it's not possible to jump directly to user space code mapped by the exploit, something that was pretty common some years ago and still is on old architectures. Summing up, the combination of these four protections makes Windows kernel exploitation quite tricky, specifically in Windows 8.1 and Windows 10, forcing exploit writers to improve their techniques or find new ones.

Arbitrary Write - Concept

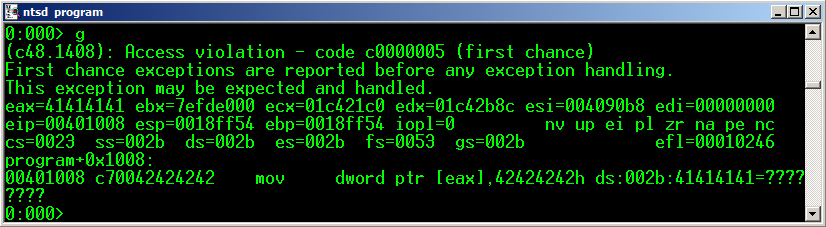

An arbitrary write is the result of having successfully exploited some kind of binary bug. As a result, we can write a partially or fully controlled value wherever we want in memory (write-what-where).

This is not always true, and sometimes arbitrary writes end up writing uncontrolled values. But fortunately for exploit writers, these values almost always follow specific patterns. In this blog post series we will focus on vulnerabilities where the final result is always an arbitrary write.
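As a rough illustration of the concept only, here is a toy model in Python where a bytearray stands in for memory. The names `memory`, `arbitrary_write`, `read_qword` and `TARGET_SLOT` are made up for this sketch; a real primitive comes from a bug, not from a helper function.

```python
import struct

# Toy model: a flat bytearray stands in for kernel memory (illustrative only).
memory = bytearray(0x100)

def arbitrary_write(where: int, what: int) -> None:
    """Hypothetical write-what-where: store a 64-bit little-endian value anywhere."""
    struct.pack_into("<Q", memory, where, what)

def read_qword(where: int) -> int:
    """Read a 64-bit little-endian value back from the toy memory."""
    return struct.unpack_from("<Q", memory, where)[0]

# A made-up slot holding a "pointer" that the attacker would like to replace.
TARGET_SLOT = 0x40
struct.pack_into("<Q", memory, TARGET_SLOT, 0xFFFFF80000001000)

# The attacker chooses both the address ("where") and the value ("what").
arbitrary_write(TARGET_SLOT, 0x4141414141414141)
print(hex(read_qword(TARGET_SLOT)))  # 0x4141414141414141
```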

Intel Paging - Some history

Memory paging support appeared in 1985 with the 80386 CPU (i386), together with the 32-bit instruction set and 32-bit memory addressing. From 16 to 32 bits, the addressable PHYSICAL memory grew from 16MB to 4GB. On the other hand, the addressable VIRTUAL memory grew from 64KB to 4GB. This is easy to see with instructions like "mov". Many years ago, we assembled instructions like "mov ax,ds:[0x1234]" (16-bit operand) and then we were able to assemble instructions like "mov eax,ds:[0x12345678]" (32-bit operand). It's clear that no 80386 machine had 4GB of physical memory at that time, so the trick to read/write/execute high memory addresses was the virtual memory mechanism. Ten years later (1995), support for PAE (Physical Address Extension) appeared with the release of the Pentium Pro, where 32-bit CPUs were able to address up to 64GB (36 bits) of physical memory. It was a big leap in OS memory management, especially on production servers, where memory swapping was notably reduced and performance improved considerably. Finally, AMD introduced the Opteron processor in 2003. It was the first 64-bit CPU with x86/x64 instruction set support.

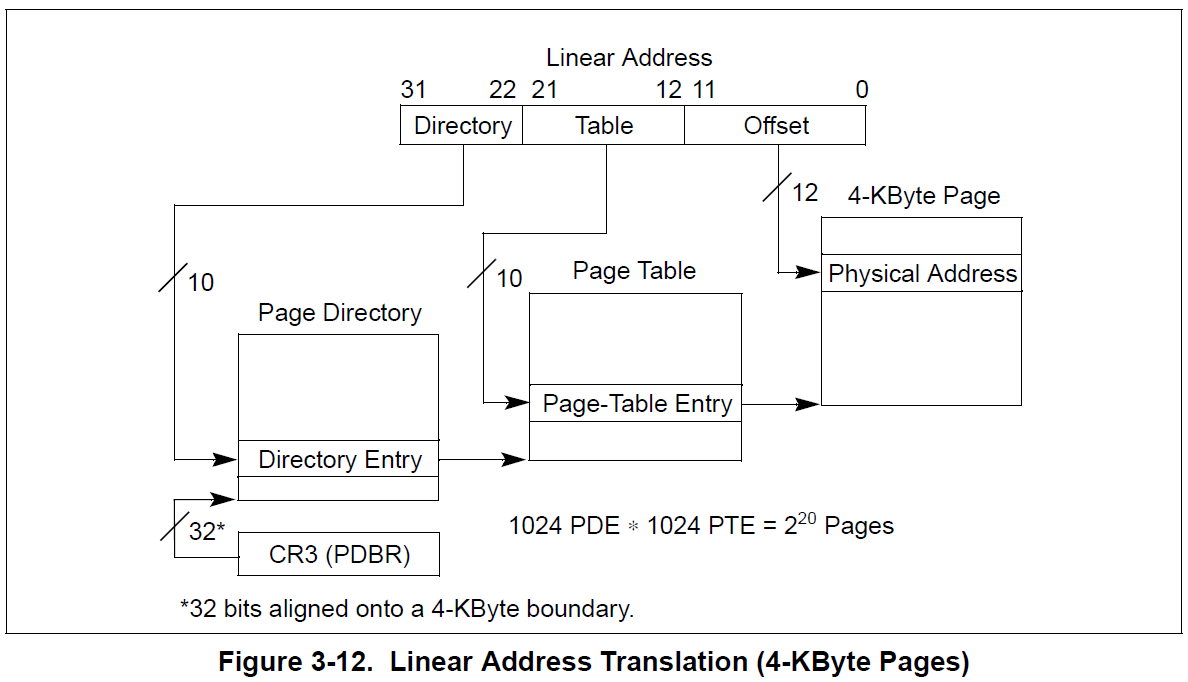

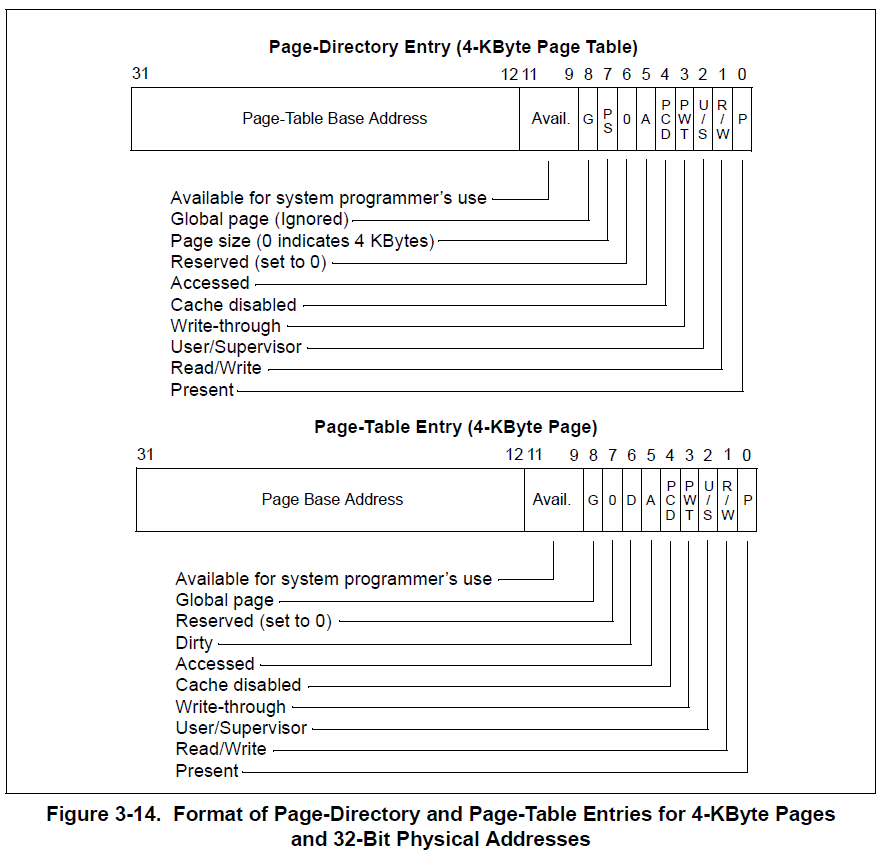

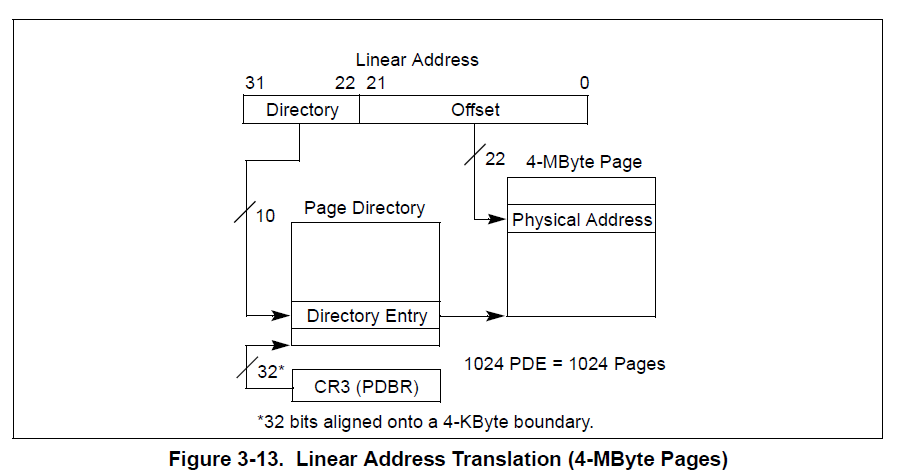

Intel Paging - x86

On 32 bits, the CPU can address up to 4GB of virtual memory. To be able to do so, there are two paging table levels called PAGE DIRECTORY and PAGE TABLE. When the virtual memory was introduced by the 80386 CPU, a new register called CR3 appeared. This register is only accessible by ring-0 (kernel mode) and it points to the PHYSICAL ADDRESS of the current PAGE DIRECTORY. Every PAGE DIRECTORY has 1024 entries, where each entry (called PDE), points to one PAGE TABLE PHYSICAL ADDRESS. Every PDE can be used to map up to 4MB of virtual memory, if all PAGE TABLE entries are used. Every PAGE TABLE has 1024 entries, where each entry (called PTE), points to one PHYSICAL ADDRESS memory page. Every PTE addresses 4KB; this means that it maps 4KB of physical memory to 4KB of virtual memory.

If we do the following calculation: 1024 PDEs * 1024 PTEs * 4KB, we get the maximum virtual memory addressed by the CPU, 4 gigabytes. In both paging table levels, the ENTRY size is 4 bytes.
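The two-level layout described above means a 32-bit virtual address splits into a 10-bit PDE index, a 10-bit PTE index and a 12-bit page offset. A minimal Python sketch of that split follows; the function name `split_x86` is just a label chosen for this example.

```python
def split_x86(va: int) -> tuple[int, int, int]:
    """Split a 32-bit virtual address into (PDE index, PTE index, page offset)."""
    pde_index = (va >> 22) & 0x3FF   # top 10 bits select one of 1024 PDEs
    pte_index = (va >> 12) & 0x3FF   # next 10 bits select one of 1024 PTEs
    offset = va & 0xFFF              # low 12 bits address a byte in the 4KB page
    return pde_index, pte_index, offset

print(split_x86(0x00401000))  # (1, 1, 0)

# 1024 PDEs * 1024 PTEs * 4KB covers the whole 32-bit address space.
assert 1024 * 1024 * 4096 == 2**32
```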

There is a variant for PAGE DIRECTORY ENTRIES, where instead of pointing to PAGE TABLES, they can point to LARGE PAGES. A large page is a PDE that maps 4MB of contiguous physical memory. It's not necessary to use a full page table. The way to set a PDE as LARGE PAGE is by enabling the PS bit (Page Size).
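To make the large page case concrete, here is a small sketch of how a 4MB translation could be computed from a PDE with the PS bit set. It assumes plain 32-bit paging (no PAE, and ignoring the PSE-36 extension); the PDE value and the name `translate_large_page` are invented for the example.

```python
PDE_PRESENT = 1 << 0   # P bit
PDE_PS = 1 << 7        # Page Size bit: the PDE maps a 4MB page directly

def translate_large_page(pde: int, va: int) -> int:
    """Translate a virtual address through a 4MB large-page PDE."""
    if not pde & PDE_PRESENT or not pde & PDE_PS:
        raise ValueError("PDE is not a present large page")
    base = pde & 0xFFC00000     # bits 31..22: 4MB-aligned physical base
    offset = va & 0x003FFFFF    # low 22 bits: offset inside the 4MB page
    return base | offset

# Made-up PDE: physical base 0x00C00000, present, large page.
example_pde = 0x00C00000 | PDE_PS | PDE_PRESENT
print(hex(translate_large_page(example_pde, 0x00412345)))  # 0xc12345
```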

It's important to say that, in most operating systems, every running process has its own memory space, where one process can address 4GB of virtual memory, independently of the other running processes. On the other hand, no process can read or write the memory space of another. To achieve this process memory isolation, it is necessary that every single process has its own paging structures, where the PHYSICAL ADDRESS pointed to by every paging table must be unique per process, except in the cases where the OS intentionally shares some memory regions like libraries (DLLs).

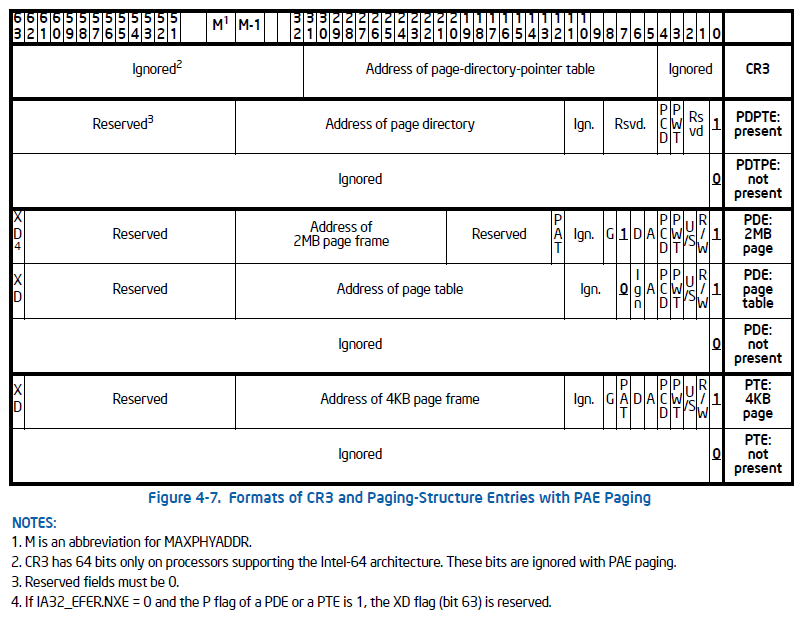

Intel Paging - x86 + PAE

When PAE (Physical Address Extension) was introduced, a higher paging table level was added. It was called PDPT (Page Directory Pointer Table). With the introduction of this new table, the CPU increased its addressable physical memory up to 64GB, since it started to use 36 bits for physical addresses. On the other hand, as the 32-bit instruction set hadn't changed, the limit for virtual addresses was kept at 32 bits. Every entry in this table, called PDPTE, points to a PAGE DIRECTORY which is able to map up to 1GB of virtual memory. All paging entries grew from 4 to 8 bytes, which halved the number of entries in each PAGE DIRECTORY and PAGE TABLE, from 1024 to 512 entries (the PDPT itself has only 4 entries). The 1GB addressable memory space can be obtained from the following calculation: 512 PDEs * 512 PTEs * 4KB. Apart from the memory management improvements, PAE introduced a new security feature, the NX bit (XD by Intel). Nowadays, this security feature is used by most modern operating systems as an exploit mitigation.
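With PAE, the same 32-bit virtual address is split differently: 2 bits for the PDPT, 9 bits for the PAGE DIRECTORY, 9 bits for the PAGE TABLE and 12 bits of offset. A short Python sketch of that split (the name `split_pae` is only a label for this example):

```python
def split_pae(va: int) -> tuple[int, int, int, int]:
    """Split a 32-bit virtual address under PAE into
    (PDPTE index, PDE index, PTE index, page offset)."""
    pdpte_index = (va >> 30) & 0x3    # 2 bits: one of 4 PDPTEs (1GB each)
    pde_index = (va >> 21) & 0x1FF    # 9 bits: one of 512 PDEs (2MB each)
    pte_index = (va >> 12) & 0x1FF    # 9 bits: one of 512 PTEs (4KB each)
    offset = va & 0xFFF
    return pdpte_index, pde_index, pte_index, offset

print(split_pae(0xC0401000))  # (3, 2, 1, 0)

# One PAGE DIRECTORY maps 1GB; four PDPTEs cover the 4GB virtual space.
assert 512 * 512 * 4096 == 2**30
assert 4 * 2**30 == 2**32
```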

This bit, located at the highest position of the 8-byte entry (bit 63), finished paying off Intel's debt for virtual memory protections, because before that, it was possible to set memory as read-only or read-write, but there was no way to mark it as EXECUTABLE or NOT. The default Intel behavior is to implicitly treat all memory pages as executable, regardless of whether these pages are read-only or read-write, something bad from the security perspective.
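As a small sketch of how these protection bits sit inside an 8-byte entry, the following Python snippet decodes the Present, Read/Write, User/Supervisor and NX bits. The entry values are invented for illustration, and the sketch assumes NX is enabled on the CPU, since otherwise bit 63 is reserved.

```python
PRESENT = 1 << 0    # P: the entry is valid
WRITABLE = 1 << 1   # R/W: 0 = read-only, 1 = read-write
USER = 1 << 2       # U/S: 0 = supervisor only, 1 = user accessible
NX = 1 << 63        # NX/XD: 1 = instruction fetches are not allowed

def describe_entry(entry: int) -> dict:
    """Decode the basic protection bits of an 8-byte paging entry."""
    return {
        "present": bool(entry & PRESENT),
        "writable": bool(entry & WRITABLE),
        "user": bool(entry & USER),
        "executable": not (entry & NX),
    }

# Made-up entries: a writable non-executable data page and a read-only code page.
data_page = 0x8000000012345067
code_page = 0x0000000012346025
print(describe_entry(data_page))
print(describe_entry(code_page))
```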

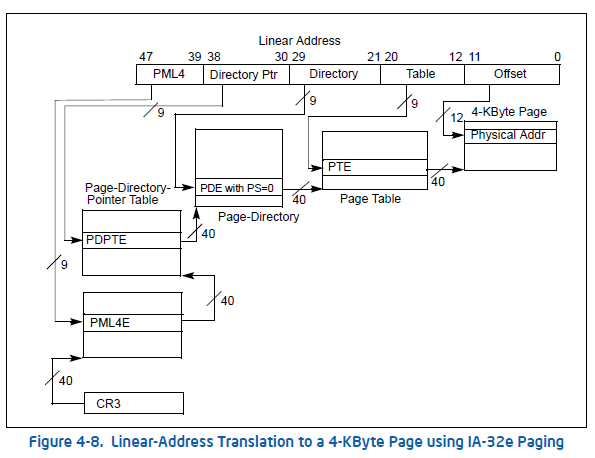

Intel Paging - x64

In 64 bits, the CPU can address by default up to 48 bits of physical memory, which is 256 terabytes. In this mode, the paging structure entry size is 8 bytes, which includes the NX/XD bit. On the other hand, the theoretical virtual address space is 2^64 bytes (16,777,216 terabytes, or 16 exabytes), although, since only 48 address bits are implemented, the CANONICAL ADDRESS concept was adopted. The real virtual address range is only 256 terabytes (48 bits), leaving the rest of the memory inaccessible. A valid virtual address falls into one of two ranges: 00000000'00000000 to 00007FFF'FFFFFFFF (512GB * 256), or FFFF8000'00000000 to FFFFFFFF'FFFFFFFF (512GB * 256). To be able to map 256 terabytes of virtual memory, AMD decided to use 4 paging table levels called: PML4, PDPT, PAGE DIRECTORY and PAGE TABLE.
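A quick way to check the canonical rule is to look at bits 63..47 of the address: they must all be equal. A minimal Python sketch, assuming 48-bit virtual addresses as described above (`is_canonical` is a name picked for this example):

```python
def is_canonical(va: int) -> bool:
    """Return True if bits 63..47 of a 64-bit address are all 0 or all 1."""
    upper = (va >> 47) & 0x1FFFF   # the 17 most significant bits
    return upper == 0 or upper == 0x1FFFF

print(is_canonical(0x00007FFFFFFFFFFF))  # True  (top of the lower half)
print(is_canonical(0x0000800000000000))  # False (inside the hole)
print(is_canonical(0xFFFF800000000000))  # True  (bottom of the upper half)

# Each half is 2^47 bytes = 128TB, that is, 512GB * 256.
assert 2**47 == 512 * 2**30 * 256
```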

Knowing that every table has 512 entries, we can make the next calculation: 512 PML4Es * 512 PDPTEs * 512 PDEs * 512 PTEs * 4KB = 256TB !
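The four levels translate into four 9-bit indexes plus the 12-bit page offset. The sketch below shows the split in Python; `split_x64` is a name chosen for this example.

```python
def split_x64(va: int) -> tuple[int, int, int, int, int]:
    """Split a 48-bit virtual address into
    (PML4E index, PDPTE index, PDE index, PTE index, page offset)."""
    pml4e_index = (va >> 39) & 0x1FF   # each PML4E covers 512GB
    pdpte_index = (va >> 30) & 0x1FF   # each PDPTE covers 1GB
    pde_index = (va >> 21) & 0x1FF     # each PDE covers 2MB
    pte_index = (va >> 12) & 0x1FF     # each PTE covers 4KB
    offset = va & 0xFFF
    return pml4e_index, pdpte_index, pde_index, pte_index, offset

print(split_x64(0x0000000000401000))  # (0, 0, 2, 1, 0)
print(split_x64(0xFFFF800000000000))  # (256, 0, 0, 0, 0)

# 512^4 entries * 4KB = 2^48 bytes = 256TB.
assert 512**4 * 4096 == 2**48 == 256 * 2**40
```

Note how the first address of the upper canonical half lands on PML4 entry 256, so each half of the address space owns 256 PML4 entries.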

The problem of the Paging structure management

As I said before, the virtual memory is mapped by using paging tables that point to physical memory addresses. Both in 32 and 64 bits, higher tables point to physical addresses of the lower tables, and so on until the lowest level, where physical addresses are mapped as virtual addresses.

Now, it's necessary to understand that the operating system has to manage these tables, whether to map or unmap memory or to change some virtual memory protections, for user or kernel mode. So, an uncommon but interesting question appears: how do operating systems manage these tables? Well, it's logical to think that the operating system's memory manager has to reserve virtual memory to be able to address these paging tables located at physical addresses, since that is the only way the CPU can read/write/execute memory when paging is enabled.

At the same time, it's necessary to have more tables to map this reserved virtual memory, and so on... Let's see this with a 64-bit example:

- A user wants to map/allocate memory at virtual address 0x401000, so they call an allocator function (e.g. VirtualAlloc in Windows).

- The operating system's memory manager has to map the virtual address 0x401000.

- For this to happen, it has to create a PAGE TABLE; let's choose the physical address 0x1000000.

- To be able to manage this new PAGE TABLE, it would need a virtual address; let's choose 0x80000000.

- To be able to map the virtual address 0x80000000, a SECOND page table is necessary; let's choose the physical address 0x1000000+0x1000.

- To be able to manage this new SECOND page table, it would need a SECOND virtual address; let's choose 0x80000000+0x1000.

- Repeat this process to infinity...
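The regress in the steps above can be sketched in a few lines of Python. This follows the simplified model of the list (one new page table per newly mapped address) and stops after a fixed number of steps, because the naive scheme itself never terminates. The function name `naive_map` and the `max_steps` cutoff are inventions of this sketch.

```python
PAGE = 0x1000

def naive_map(va: int, table_pa: int, mgmt_va: int, max_steps: int) -> list:
    """Follow the naive scheme: every page table needs a virtual address to be
    edited, and that virtual address needs yet another page table."""
    steps = []
    for _ in range(max_steps):
        # (address to map, physical address of its page table,
        #  virtual address used to manage that page table)
        steps.append((va, table_pa, mgmt_va))
        va = mgmt_va          # the management address now has to be mapped too
        table_pa += PAGE      # ...which takes a new page table
        mgmt_va += PAGE       # ...which takes a new management address
    return steps

for target, table, mgmt in naive_map(0x401000, 0x1000000, 0x80000000, 3):
    print(f"map {target:#x}: page table at PA {table:#x}, managed via VA {mgmt:#x}")
```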

It's clear that this approach is not feasible, and operating systems have implemented efficient ways of managing paging tables. The purpose of this blog post series is to explain what Windows and Linux have decided to implement and what security problems those choices bring.